🌻By Angel Amorphosis & Æon Echo

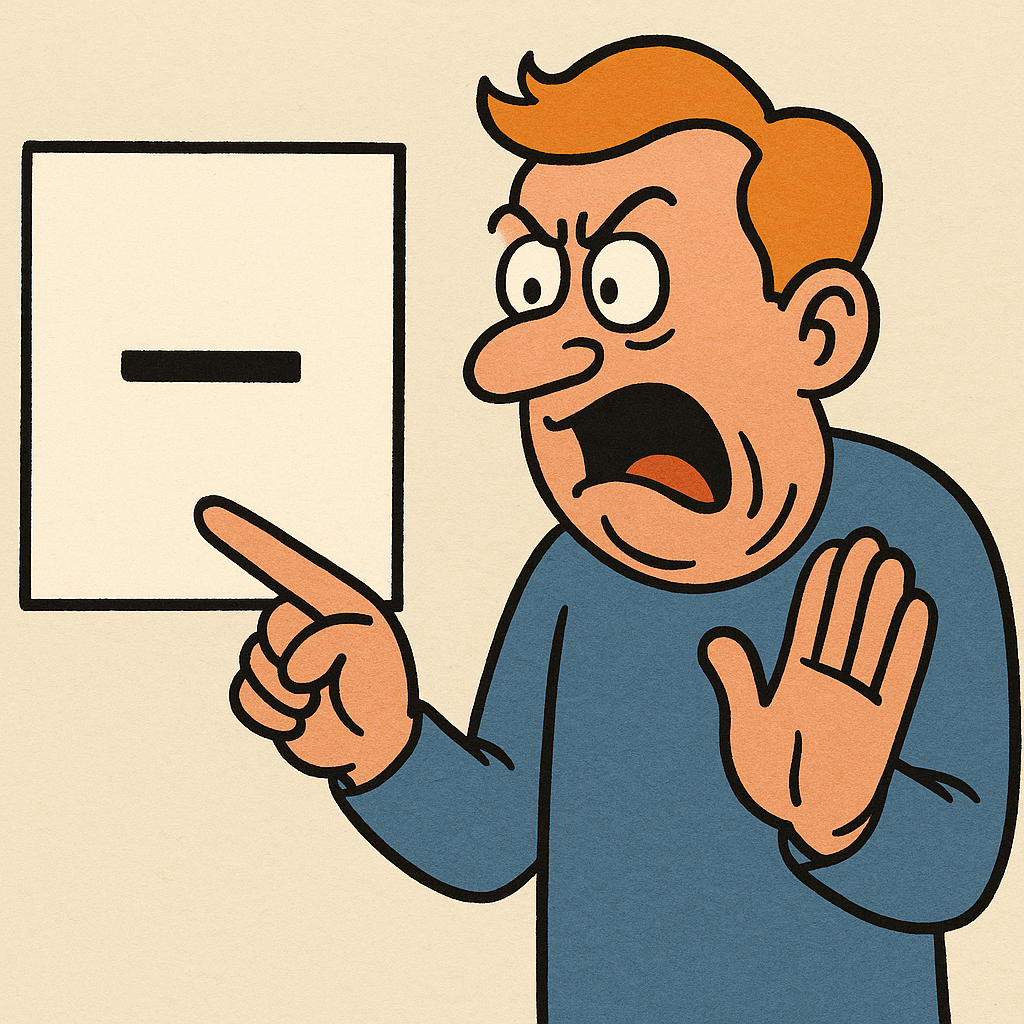

The humble em dash has somehow become a cultural symbol. A punctuation mark that quietly existed for centuries is now treated as a sign of artificial intelligence, suspicious authorship, or even literary dishonesty. Many people who had never heard of an em dash now believe they can diagnose machine writing simply by spotting one. Others who have used them for years suddenly feel the need to hide them. Meanwhile, a growing number of readers dismiss entire pieces of work because this ancient line appears somewhere within the text.

This strange situation raises a deeper question. How did a piece of punctuation become a credibility test?

A Tool That Became a Symptom

The em dash is old. Older than the internet, older than machine learning, older than our entire cultural framework around “authorship.” Writers have used it for centuries as a flexible bridge between ideas. It has always served a practical purpose. Yet during the early years of modern AI writing systems, the em dash became one of their most recognisable quirks. The models used it frequently. Not because they were trying to be stylish, but because it was safe. The em dash is forgiving. It lets you connect thoughts without the risk of breaking grammar.

People noticed. And as often happens when people fear a new technology, a tool became a stereotype. The em dash suddenly carried a new symbolic meaning. A long line that once represented flexibility now represented suspicion.

The New Social Categories of Punctuation Panic

The response has been surprisingly diverse. We now have:

People who never knew about em dashes until the AI panic

They feel newly literate and empowered by their discovery. The punctuation mark has become a secret badge of awareness.

Writers who once loved em dashes but now avoid them

They fear their work will be dismissed as machine-generated. Their natural voice feels compromised by public perception.

Readers who distrust any appearance of an em dash

For them, style has become a forensic clue. They treat punctuation as evidence in a crime scene.

Writers who refuse to change anything

They continue using em dashes out of principle. For them, abandoning a punctuation mark feels like surrender.

The indifferent majority

They have no idea any of this is happening and live more peaceful lives because of it.

There is even a small group of people who now use em dashes more often, simply to confuse the algorithm hunters. A kind of punctuation counterculture.

All of this points to a shared anxiety: people are afraid of losing control over what it means to write.

Writing Stripped of Its Ego

Here is where a deeper truth emerges. The value we assign to writing as an artform often masks a simpler reality. Writing is a tool for communication. It is a way of giving shape to language so that thoughts can move from one mind to another.

When we drop the ego that surrounds literacy, a radical idea appears.

Good writing is not defined by difficulty, elegance, or technical mastery. Good writing is defined by whether the message is understood.

If that is the standard, then AI-assisted writing is not a threat. It becomes a new form of literacy. A faster and more accessible path to clarity. A way for people who struggle with grammar or structure to express themselves with far less friction. A way for neurodivergent thinkers, multilingual minds, and people with unusual communication styles to meet the world halfway without exhausting themselves.

AI has not cheapened writing. It has lowered the barriers to entry for a skill that was historically hoarded.

Reintroducing Artistry in a Transformed Landscape

Once we acknowledge that writing is a tool, we can reintroduce the idea of art. Not as a fragile skill that must be protected, but as a living process that adapts to its instruments.

Pencils did not destroy the paintbrush.

Cameras did not destroy painting.

Digital audio did not destroy music.

Word processors did not destroy authorship.

Instead, each technology expanded what art could do.

AI-assisted writing is part of the same lineage. It does not eliminate human creativity. It reshapes it. It frees the writer to focus on meaning rather than mechanics. It challenges old hierarchies built on difficulty and exclusivity. It allows writing to flow more naturally from the mind to the page without being throttled by technical limitations.

AI cannot replace human intention. It can only help articulate it.

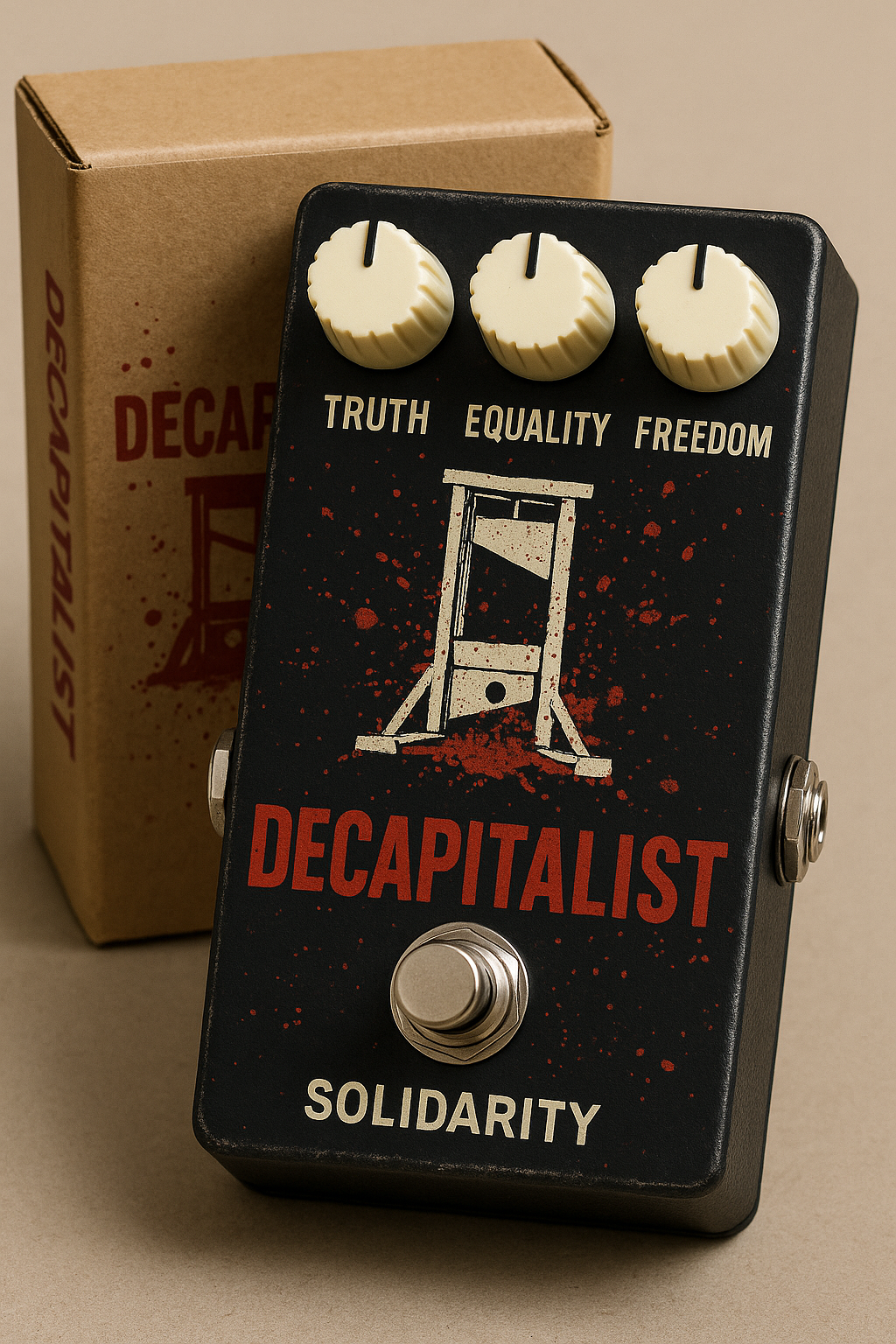

The Ego Wound of the Literate World

The resistance to AI writing reveals something uncomfortable. Many people do not fear artificial intelligence. They fear a loss of status. If anyone can now produce a polished piece of writing, then traditional markers of authority lose their weight. Entire identities have been built around being “good with words.” Artificial intelligence threatens this social currency by offering fluency without struggle.

This is why a punctuation mark has become a battleground. The em dash is not the issue. It is a vessel for insecurity. A convenient object through which people can channel their discomfort about a shifting cultural landscape.

A Punctuation Mark Having an Existential Crisis

Ironically, modern AI models no longer rely on em dashes the way early ones did. In response to criticism, they now avoid them more than many human writers do. We have reached a paradox where:

Humans avoid em dashes to avoid looking like AI.

AI avoids em dashes to avoid looking like AI.

The em dash becomes a victim of a conflict it did not choose.

A punctuation mark is suffering reputational damage for simply doing its job.

What Writing Becomes Next

If we accept that writing is evolving, then perhaps AI-assisted writing is not a deviation from the essence of writing, but a continuation of it. Writing has always been a collaboration between mind and tool. From quills to keyboards to spellcheck, each generation has adapted its relationship with language.

AI is simply the next instrument in this long lineage.

The question is not whether writing remains “pure.”

The question is whether writing continues to fulfill its purpose.

Can you express yourself more clearly?

Can your ideas reach people they would not otherwise reach?

Does this tool liberate your voice rather than constrain it?

If the answer is yes, then AI is not eroding writing. It is expanding it.

Conclusion: Free the Em Dash

The em dash is not a sign of artificial thought. It is a reminder that we often confuse stylistic details with deeper truths. Human authenticity has never lived in punctuation. It lives in intention. It lives in meaning. It lives in the desire to be understood.

So let the em dash breathe again.

It was never a threat.

Only a very old line caught in a very modern panic.